Encapsulates information about the distribution of panes on all processes and handles cross-pane communication for shared nodes, ghost nodes, and ghost cells.

#include <Pane_communicator.h>

Classes

| struct | Pane_comm_buffers |

| Buffers for outgoing and incoming messages of a specific pane to be communicated with another pane (either local or global). |

Public Types

| enum | Buff_type { RNS, RCS, SHARED_NODE, GNR, GCR } |

Public Member Functions | |

| Pane_communicator (COM::Window *w, MPI_Comm c=MPI_COMM_WORLD) | |

| Constructor from a communicator. More... | |

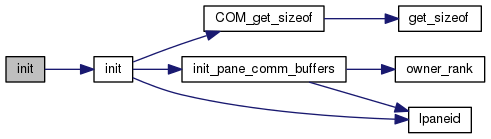

| void | init (void **ptrs, COM_Type type, int ncomp, const int *sizes=NULL, const int *strds=NULL) |

| Initialize the communication buffers. More... | |

| void | init (COM::Attribute *att, const COM::Attribute *my_pconn=NULL) |

| Initialize the communication buffers. More... | |

| MPI_Comm | mpi_comm () const |

| Obtain the MPI communicator for the object. More... | |

| std::vector< COM::Pane * > & | panes () |

| Obtains all the local panes. More... | |

| int | owner_rank (const int pane_id) const |

| Obtain the process rank of a given pane. More... | |

| int | total_npanes () const |

| Obtain the total number of panes on all processes. More... | |

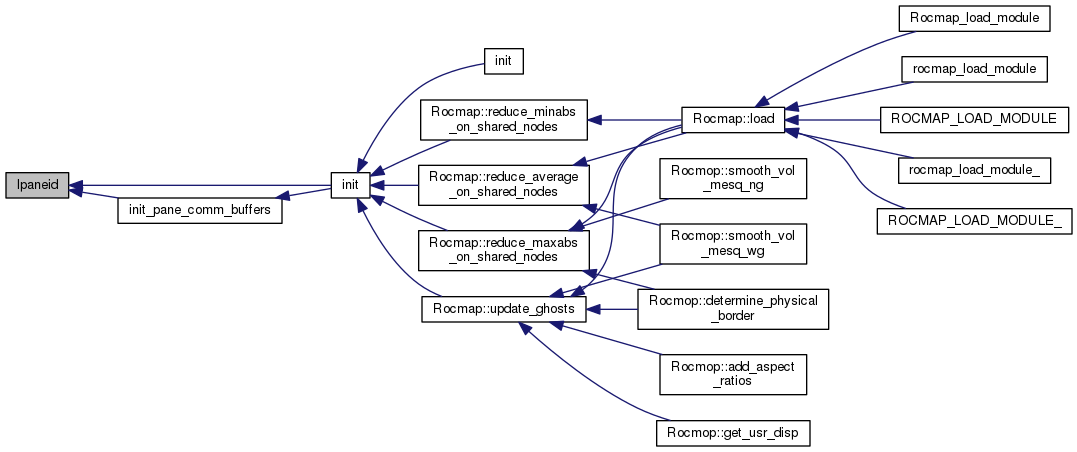

| int | lpaneid (const int pane_id) const |

| For a given pane, obtain an internal pane ID which is unique and contiguous across processes. More... | |

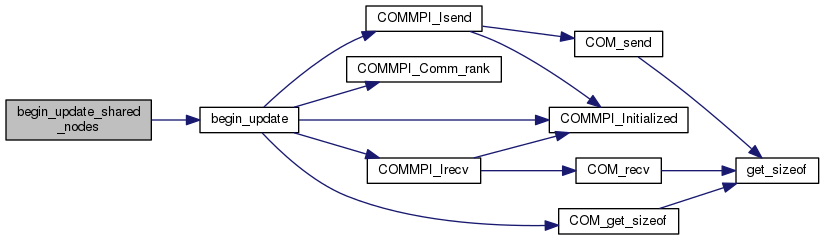

| void | begin_update_shared_nodes (std::vector< std::vector< bool > > *involved=NULL) |

| Initiates updating shared nodes by calling MPI_Isend and MPI_Irecv. More... | |

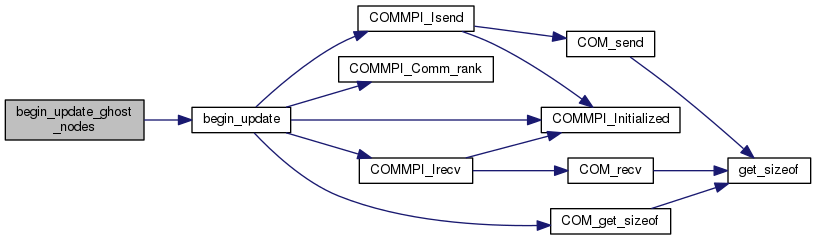

| void | begin_update_ghost_nodes () |

| Initiates updating ghost nodes by calling MPI_Isend and MPI_Irecv. More... | |

| void | begin_update_ghost_cells () |

| Initiates updating ghost cells by calling MPI_Isend and MPI_Irecv. |

| void | end_update_shared_nodes () |

| Finalizes shared node updating by calling MPI_Waitall on all send requests. More... | |

| void | end_update_ghost_nodes () |

| Finalizes ghost node updating by calling MPI_Waitall on all send requests. More... | |

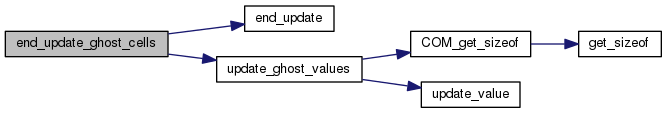

| void | end_update_ghost_cells () |

| Finalizes ghost cell updating by calling MPI_Waitall on all send requests. More... | |

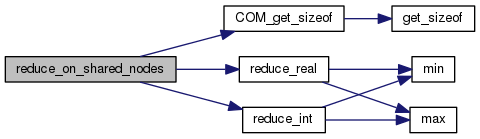

| void | reduce_on_shared_nodes (MPI_Op) |

| Perform a reduction operation using locally cached values of the shared nodes, assuming begin_update_shared_nodes() has been called. More... | |

| void | reduce_maxabs_on_shared_nodes () |

| Reduce to the value with the maximum absolute value using locally cached values of shared nodes. More... | |

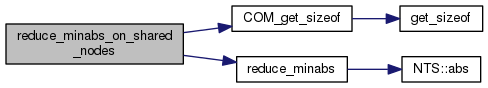

| void | reduce_minabs_on_shared_nodes () |

| Reduce to the value with the minimum absolute value using locally cached values of shared nodes. |

| void | reduce_diff_on_shared_nodes () |

| Compute difference of non-zero values of each shared node, assuming there are at most two non-zero values. More... | |

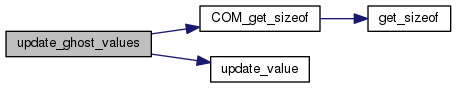

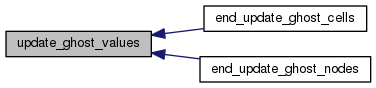

| void | update_ghost_values () |

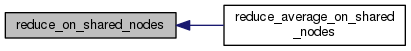

| void | reduce_average_on_shared_nodes () |

| Reduce to the average of values using locally cached values of the shared nodes, assuming begin_update_shared_nodes() has been called. More... | |

Protected Member Functions | |

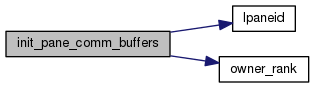

| void | init_pane_comm_buffers (std::vector< Pane_comm_buffers > &pcb, const int *ptr, int &index, const int n_items, const int lpid) |

| Initialize a Pane_comm_buffers for ghost information. More... | |

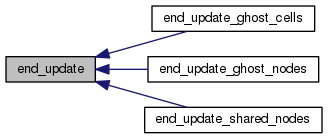

| void | end_update () |

| Finalizes updating by calling MPI_Waitall on all send requests. More... | |

| void | begin_update (const Buff_type btype, std::vector< std::vector< bool > > *involved=NULL) |

| Initiates updating by calling MPI_Isend and MPI_Irecv. More... | |

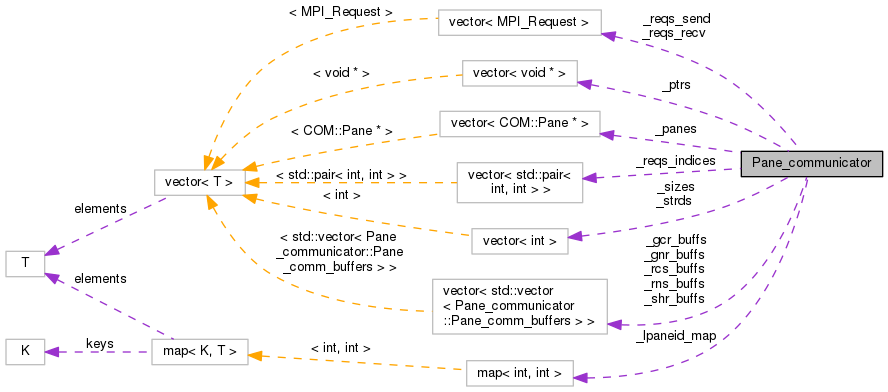

Protected Attributes

| int | _my_pconn_id |

| The ID of the pconn being used. |

| COM::Window * | _appl_window |

| Reference to the application window. |

| const MPI_Comm | _comm |

| MPI communicator. |

| std::vector< COM::Pane * > | _panes |

| Vector of all local panes. |

| int | _total_npanes |

| The total number of panes on all processes. |

| std::map< int, int > | _lpaneid_map |

| Mapping from user-defined pane IDs to internal IDs that are unique and contiguous across all processes; the internal IDs are useful for defining unique tags for MPI messages. |

| int | _type |

| The base data type, number of components, and the number of bytes of all components for the data to be communicated. |

| int | _ncomp |

| int | _ncomp_bytes |

| std::vector< void * > | _ptrs |

| An array of pointers to the data for all local panes. |

| std::vector< int > | _sizes |

| The sizes of the arrays for all local panes. |

| std::vector< int > | _strds |

| The strides of the arrays for all local panes. |

| std::vector< std::vector < Pane_comm_buffers > > | _shr_buffs |

| Shared node pane communication buffers. |

| std::vector< std::vector < Pane_comm_buffers > > | _rns_buffs |

| Buffer for real nodes to send. |

| std::vector< std::vector < Pane_comm_buffers > > | _gnr_buffs |

| Buffer for ghost nodes to receive. |

| std::vector< std::vector < Pane_comm_buffers > > | _rcs_buffs |

| Buffer for real cells to send. |

| std::vector< std::vector < Pane_comm_buffers > > | _gcr_buffs |

| Buffer for ghost cells to receive. |

| std::vector< MPI_Request > | _reqs_send |

| Arrays of pending nonblocking MPI requests. Same format as _shr_buffs. |

| std::vector< MPI_Request > | _reqs_recv |

| std::vector< std::pair< int, int > > | _reqs_indices |

| The indices in buffs for each pending nonblocking receive request. |

Private Member Functions

| Pane_communicator (const Pane_communicator &) |

| Pane_communicator & | operator= (const Pane_communicator &) |

Only one of the three types of communication (shared nodes, ghost nodes, or ghost cells) may be in progress at a time, because they share the same set of tags and request buffers.
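Callers can enforce this one-at-a-time rule by checking that every begin_* call is matched by its end_* call before the next begin_* call. The sketch below uses a hypothetical `Update_guard` helper and an `Update_kind` enum. Neither is part of Pane_communicator. The guard only records which update is pending and rejects a second, overlapping begin.

```cpp
#include <cassert>

// Hypothetical helper (NOT part of Pane_communicator): records which kind of
// update is in flight, so that a second begin_* before the matching end_* is
// detected. Shared-node, ghost-node and ghost-cell updates share tags and
// request buffers, so at most one of them may be pending.
enum class Update_kind { NONE, SHARED_NODES, GHOST_NODES, GHOST_CELLS };

class Update_guard {
public:
  // Returns false (and changes nothing) if another update is still pending.
  bool begin(Update_kind k) {
    assert(k != Update_kind::NONE);
    if (_pending != Update_kind::NONE) return false;
    _pending = k;
    return true;
  }
  // Returns false if no update of kind k is pending.
  bool end(Update_kind k) {
    if (_pending != k) return false;
    _pending = Update_kind::NONE;
    return true;
  }
  Update_kind pending() const { return _pending; }

private:
  Update_kind _pending = Update_kind::NONE;
};
```

A wrapper could call `begin(Update_kind::GHOST_NODES)` just before begin_update_ghost_nodes() and `end(Update_kind::GHOST_NODES)` just after end_update_ghost_nodes().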

Definition at line 46 of file Pane_communicator.h.

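| enum Buff_type |

| Enumerator | Description |
|---|---|
| RNS | Real nodes to send |
| RCS | Real cells to send |
| SHARED_NODE | Shared nodes |
| GNR | Ghost nodes to receive |
| GCR | Ghost cells to receive |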

Definition at line 69 of file Pane_communicator.h.

| Pane_communicator (COM::Window *w, MPI_Comm c = MPI_COMM_WORLD) | explicit |

Constructor from a communicator.

Also initializes the internal data structures of the communicator, in particular the internal pane IDs.

Definition at line 38 of file Pane_communicator.C.

References _appl_window, _lpaneid_map, _my_pconn_id, _panes, _total_npanes, COM_PCONN, and i.
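The internal pane IDs must be unique and contiguous across processes. One simple way to get that property is to give every process the same global list of pane IDs, sort it, and number the entries from 0. The sketch below makes two assumptions. First, the IDs from all processes have already been gathered, for example with MPI_Allgatherv, which is not shown. Second, user pane IDs are globally unique. The function `build_lpaneid_map` is hypothetical, and this page does not say whether the constructor actually works this way.

```cpp
#include <algorithm>
#include <cassert>
#include <map>
#include <vector>

// Hypothetical sketch: given the user-defined pane IDs of ALL processes
// (assumed already gathered), assign each pane an internal ID in
// [0, total_npanes) that is identical on every process.
std::map<int, int> build_lpaneid_map(std::vector<int> all_pane_ids) {
  std::sort(all_pane_ids.begin(), all_pane_ids.end());
  // Assumption: pane IDs are unique across processes.
  assert(std::adjacent_find(all_pane_ids.begin(), all_pane_ids.end()) ==
         all_pane_ids.end());
  std::map<int, int> lpaneid_map;
  for (int i = 0; i < static_cast<int>(all_pane_ids.size()); ++i)
    lpaneid_map[all_pane_ids[i]] = i;  // contiguous: 0, 1, 2, ...
  return lpaneid_map;
}
```

Every process sorts the same list, so every process builds the same map without any further communication.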

|

private |

|

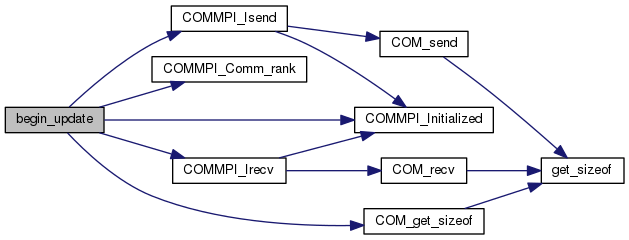

protected |

Initiates updating by calling MPI_Isend and MPI_Irecv.

See begin_update_shared_nodes() for a description of involved.

Definition at line 205 of file Pane_communicator.C.

References _comm, _gcr_buffs, _gnr_buffs, _my_pconn_id, _ncomp_bytes, _panes, _ptrs, _rcs_buffs, _reqs_indices, _reqs_recv, _reqs_send, _rns_buffs, _shr_buffs, _sizes, _strds, _total_npanes, _type, COM_assertion, COM_assertion_msg, COM_get_sizeof(), COMMPI_Comm_rank(), COMMPI_Initialized(), COMMPI_Irecv(), COMMPI_Isend(), GCR, GNR, i, Pane_communicator::Pane_comm_buffers::inbuf, Pane_communicator::Pane_comm_buffers::index, j, k, MPI_BYTE, MPI_COMM_SELF, n, nj, Pane_communicator::Pane_comm_buffers::outbuf, Pane_communicator::Pane_comm_buffers::rank, rank, RCS, RNS, SHARED_NODE, and Pane_communicator::Pane_comm_buffers::tag.

Referenced by begin_update_ghost_cells(), begin_update_ghost_nodes(), and begin_update_shared_nodes().

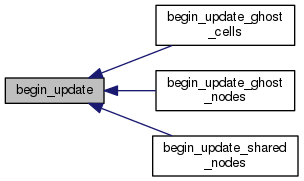

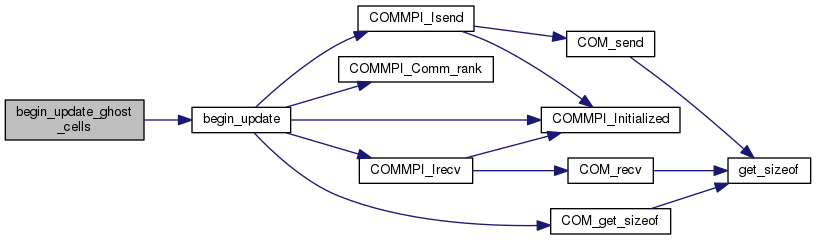

|

inline |

Initiates updating ghost nodes by calling MPI_Isend and MPI_Irecv.

Definition at line 135 of file Pane_communicator.h.

References begin_update(), GCR, and RCS.

| void begin_update_ghost_nodes () | inline |

Initiates updating ghost nodes by calling MPI_Isend and MPI_Irecv.

Definition at line 129 of file Pane_communicator.h.

References begin_update(), GNR, and RNS.

| void begin_update_shared_nodes (std::vector< std::vector< bool > > *involved = NULL) | inline |

Initiates updating shared nodes by calling MPI_Isend and MPI_Irecv.

If involved is not NULL, then on exit it contains one bitmap per local pane, whose size is the number of real nodes of that pane; the bits corresponding to shared nodes are set to true. This information is required to overlap communication and computation during reduction operations.

Definition at line 124 of file Pane_communicator.h.

References begin_update(), and SHARED_NODE.
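The sketch below shows the layout of the involved output: one `std::vector<bool>` per local pane, with one bit per real node. The inputs are hypothetical. `n_real_nodes[p]` is the number of real nodes of local pane p, and `shared_nodes[p]` lists its shared nodes as 0-based indices. The 0-based indexing is an assumption made for simplicity. While messages are in flight, a caller could process the nodes whose bit is false.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical sketch of the `involved` layout: involved[p][i] is true iff
// real node i (0-based here, an assumption) of local pane p is shared.
std::vector<std::vector<bool>> build_involved(
    const std::vector<int>& n_real_nodes,
    const std::vector<std::vector<int>>& shared_nodes) {
  assert(n_real_nodes.size() == shared_nodes.size());
  std::vector<std::vector<bool>> involved(n_real_nodes.size());
  for (std::size_t p = 0; p < n_real_nodes.size(); ++p) {
    involved[p].assign(n_real_nodes[p], false);  // one bit per real node
    for (int node : shared_nodes[p]) {
      assert(node >= 0 && node < n_real_nodes[p]);
      involved[p][node] = true;                  // mark shared node
    }
  }
  return involved;
}
```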

|

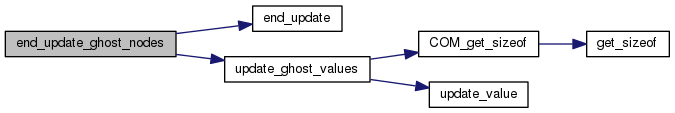

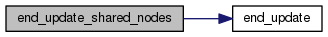

protected |

Finalizes updating by calling MPI_Waitall on all send requests.

Definition at line 351 of file Pane_communicator.C.

References _comm, _reqs_send, and COM_assertion.

Referenced by end_update_ghost_cells(), end_update_ghost_nodes(), and end_update_shared_nodes().

| void end_update_ghost_cells () | inline |

Finalizes ghost cell updating by calling MPI_Waitall on all send requests.

Definition at line 152 of file Pane_communicator.h.

References end_update(), and update_ghost_values().

| void end_update_ghost_nodes () | inline |

Finalizes ghost node updating by calling MPI_Waitall on all send requests.

Definition at line 146 of file Pane_communicator.h.

References end_update(), and update_ghost_values().

| void end_update_shared_nodes () | inline |

Finalizes shared node updating by calling MPI_Waitall on all send requests.

Definition at line 141 of file Pane_communicator.h.

References end_update().

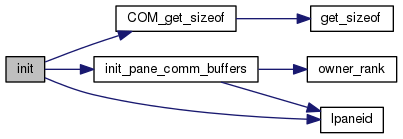

| void init | ( | void ** | ptrs, |

| COM_Type | type, | ||

| int | ncomp, | ||

| const int * | sizes = NULL, |

||

| const int * | strds = NULL |

||

| ) |

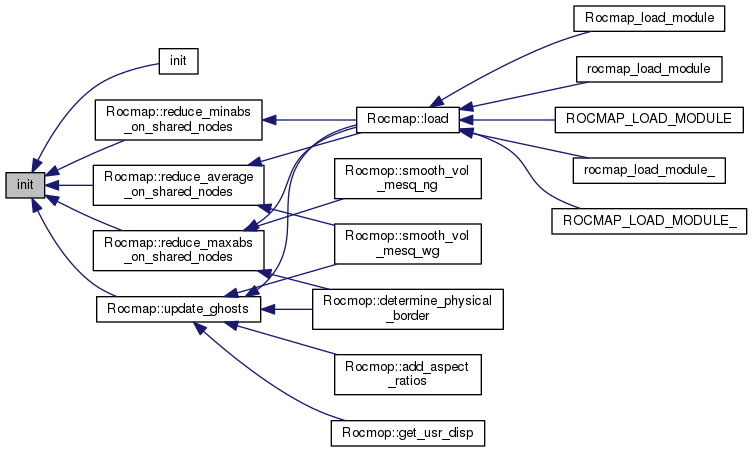

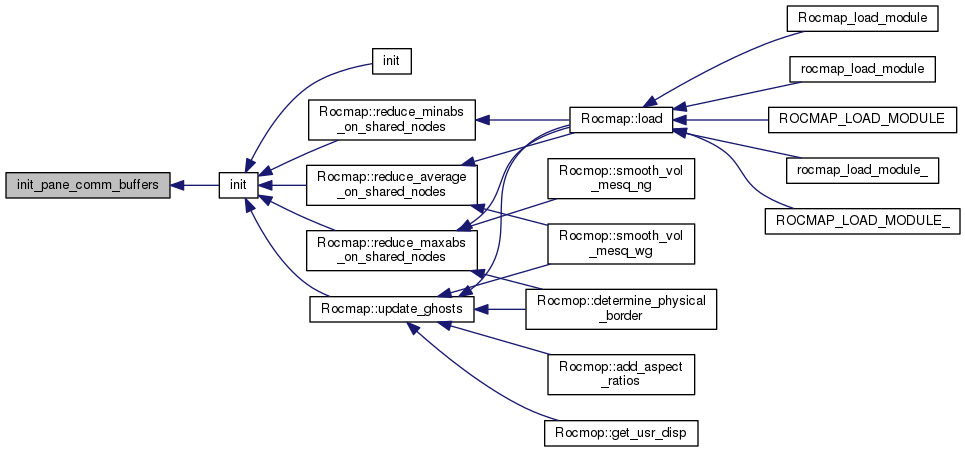

Initialize the communication buffers.

| Parameter | Description |
|---|---|
| ptrs | Array of pointers to the data for all local panes. |
| type | Base data type (such as MPI_INT). |
| ncomp | Number of components per node. |
| sizes | Number of items per pane. If NULL, the number of nodes is used. |
| strds | Stride of each array. If NULL, ncomp is used for all arrays. |

Definition at line 91 of file Pane_communicator.C.

References _gcr_buffs, _gnr_buffs, _my_pconn_id, _ncomp, _ncomp_bytes, _panes, _ptrs, _rcs_buffs, _rns_buffs, _shr_buffs, _sizes, _strds, _type, COM_get_sizeof(), i, init_pane_comm_buffers(), and lpaneid().

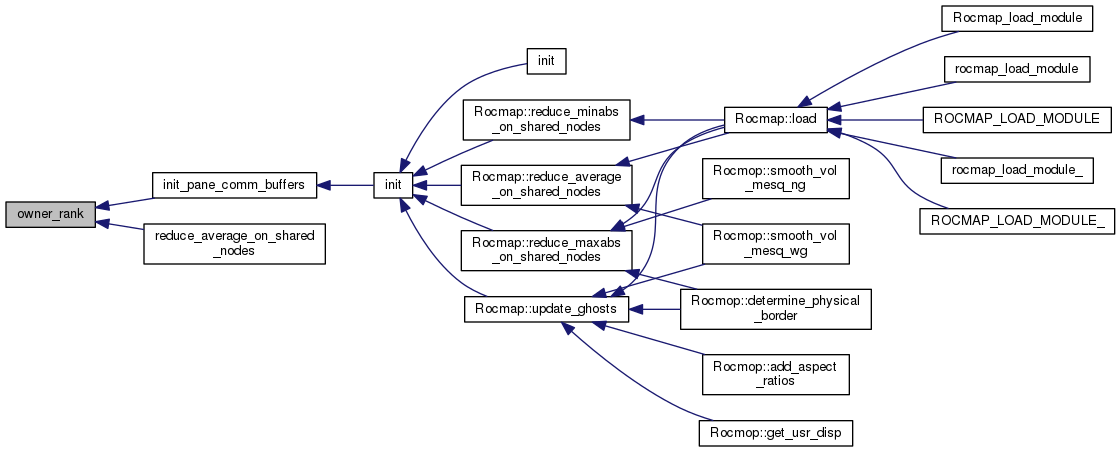

Referenced by init(), Rocmap::reduce_average_on_shared_nodes(), Rocmap::reduce_maxabs_on_shared_nodes(), Rocmap::reduce_minabs_on_shared_nodes(), and Rocmap::update_ghosts().
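The defaults for sizes and strds decide how an item is located in a pane's array. The sketch below assumes an interleaved layout, in which item i of a pane occupies entries `[i*stride, i*stride + ncomp)`. Under that assumption, a NULL `strds` means tightly packed data (stride == ncomp), and a NULL `sizes` means one item per node. The helpers `entry_offset` and `items_in_pane` are hypothetical and do not cover staggered layouts with a stride smaller than ncomp.

```cpp
#include <cassert>

// Hypothetical helper: element offset of component `comp` of item `item` in
// local pane `pane`, assuming an interleaved layout. strds == nullptr means
// every pane uses stride ncomp (tightly packed).
inline int entry_offset(int pane, int item, int comp, int ncomp,
                        const int* strds) {
  const int stride = strds ? strds[pane] : ncomp;
  assert(comp >= 0 && comp < ncomp && stride >= ncomp);
  return item * stride + comp;
}

// Hypothetical helper: number of items in local pane `pane`; sizes == nullptr
// falls back to the pane's node count.
inline int items_in_pane(int pane, const int* sizes, int n_nodes_of_pane) {
  return sizes ? sizes[pane] : n_nodes_of_pane;
}
```

For example, with ncomp = 3 and no strides, component 1 of item 2 sits at element 2*3 + 1 = 7.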

| void init (COM::Attribute *att, const COM::Attribute *my_pconn = NULL) |

Initialize the communication buffers.

| Parameter | Description |
|---|---|
| att | Pointer to the attribute whose data are communicated. |
| my_pconn | Attribute that stores the pane connectivity. |

Definition at line 56 of file Pane_communicator.C.

References _appl_window, _my_pconn_id, _panes, COM_assertion, COM_assertion_msg, COM_PCONN, i, and init().

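| void init_pane_comm_buffers (std::vector< Pane_comm_buffers > &pcb, const int *ptr, int &index, const int n_items, const int lpid) | protected |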

Initialize a Pane_comm_buffers for ghost information.

Definition at line 168 of file Pane_communicator.C.

References _total_npanes, COM_assertion_msg, Pane_communicator::Pane_comm_buffers::index, j, lpaneid(), owner_rank(), Pane_communicator::Pane_comm_buffers::rank, and Pane_communicator::Pane_comm_buffers::tag.

Referenced by init().

| int lpaneid (const int pane_id) const | inline |

For a given pane, obtain an internal pane ID which is unique and contiguous across processes.

Definition at line 112 of file Pane_communicator.h.

References _lpaneid_map, _total_npanes, and COM_assertion.

Referenced by init(), and init_pane_comm_buffers().
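Contiguous internal IDs make it easy to derive a distinct MPI tag for every ordered pair of panes. The scheme below, tag = src * total_npanes + dst, is a hypothetical illustration only; this page does not state how Pane_communicator actually builds its tags. The MPI standard guarantees only that MPI_TAG_UB is at least 32767, so a scheme like this needs total_npanes squared to stay below the tag upper bound.

```cpp
#include <cassert>

// Hypothetical tag scheme: one tag per ordered (sender, receiver) pair of
// internal pane IDs. Because IDs are contiguous in [0, total_npanes), the
// mapping is one-to-one.
inline int message_tag(int src_lpid, int dst_lpid, int total_npanes) {
  assert(src_lpid >= 0 && src_lpid < total_npanes);
  assert(dst_lpid >= 0 && dst_lpid < total_npanes);
  return src_lpid * total_npanes + dst_lpid;
}

// Inverse mapping, e.g. to find which pane pair a message belongs to.
inline void decode_tag(int tag, int total_npanes, int& src_lpid,
                       int& dst_lpid) {
  src_lpid = tag / total_npanes;
  dst_lpid = tag % total_npanes;
}
```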

| MPI_Comm mpi_comm () const | inline |

Obtain the MPI communicator for the object.

Definition at line 97 of file Pane_communicator.h.

References _comm.

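| Pane_communicator & operator= (const Pane_communicator &) | private |

| int owner_rank (const int pane_id) const | inline |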

Obtain the process rank of a given pane.

If the pane does not exist, it will return -1.

Definition at line 104 of file Pane_communicator.h.

References _appl_window.

Referenced by init_pane_comm_buffers(), and reduce_average_on_shared_nodes().

| std::vector< COM::Pane * > & panes () | inline |

Obtains all the local panes.

Definition at line 100 of file Pane_communicator.h.

References _panes.

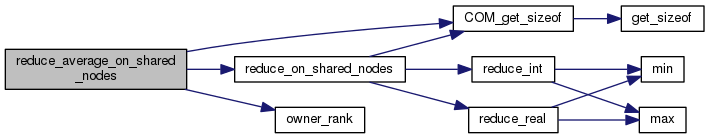

| void reduce_average_on_shared_nodes | ( | ) |

Reduce to the average of values using locally cached values of the shared nodes, assuming begin_update_shared_nodes() has been called.

Definition at line 758 of file Pane_communicator.C.

References _my_pconn_id, _ncomp, _panes, _ptrs, _strds, _type, COM_assertion_msg, COM_CHAR, COM_CHARACTER, COM_DOUBLE, COM_DOUBLE_PRECISION, COM_FLOAT, COM_get_sizeof(), COM_INT, COM_INTEGER, COM_REAL, i, j, k, MPI_SUM, owner_rank(), and reduce_on_shared_nodes().
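The reference list (MPI_SUM, reduce_on_shared_nodes()) suggests that the average is formed by summing the copies of each shared node and then dividing by their number. The sketch below shows only that arithmetic for a single node. The function name `average_copies` and its input layout are hypothetical: `copies[c]` holds the ncomp values of copy c, namely the local value plus the values received from other panes.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical sketch: average the copies of one shared node.
// copies[c][k] is component k of copy c.
std::vector<double> average_copies(
    const std::vector<std::vector<double>>& copies) {
  assert(!copies.empty());
  std::vector<double> avg(copies[0].size(), 0.0);
  for (const auto& c : copies) {  // sum all copies component-wise
    assert(c.size() == avg.size());
    for (std::size_t k = 0; k < avg.size(); ++k) avg[k] += c[k];
  }
  for (double& v : avg) v /= static_cast<double>(copies.size());
  return avg;
}
```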

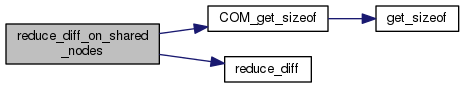

| void reduce_diff_on_shared_nodes | ( | ) |

Compute difference of non-zero values of each shared node, assuming there are at most two non-zero values.

Definition at line 623 of file Pane_communicator.C.

References _comm, _my_pconn_id, _ncomp, _ncomp_bytes, _panes, _ptrs, _reqs_indices, _reqs_recv, _shr_buffs, _strds, _type, COM_assertion, COM_assertion_msg, COM_CHAR, COM_CHARACTER, COM_DOUBLE, COM_DOUBLE_PRECISION, COM_FLOAT, COM_get_sizeof(), COM_INT, COM_INTEGER, COM_REAL, i, Pane_communicator::Pane_comm_buffers::inbuf, Pane_communicator::Pane_comm_buffers::index, j, k, nk, and reduce_diff().

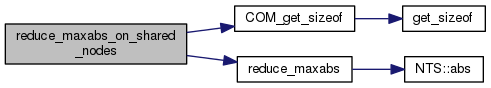

| void reduce_maxabs_on_shared_nodes | ( | ) |

Reduce to the value with the maximum absolute value using locally cached values of shared nodes.

Definition at line 497 of file Pane_communicator.C.

References _comm, _my_pconn_id, _ncomp, _ncomp_bytes, _panes, _ptrs, _reqs_indices, _reqs_recv, _shr_buffs, _strds, _type, COM_assertion, COM_assertion_msg, COM_CHAR, COM_CHARACTER, COM_DOUBLE, COM_DOUBLE_PRECISION, COM_FLOAT, COM_get_sizeof(), COM_INT, COM_INTEGER, COM_REAL, i, Pane_communicator::Pane_comm_buffers::inbuf, Pane_communicator::Pane_comm_buffers::index, j, k, nk, and reduce_maxabs().

| void reduce_minabs_on_shared_nodes | ( | ) |

Reduce to the value with the minimum absolute value using locally cached values of shared nodes.

Definition at line 560 of file Pane_communicator.C.

References _comm, _my_pconn_id, _ncomp, _ncomp_bytes, _panes, _ptrs, _reqs_indices, _reqs_recv, _shr_buffs, _strds, _type, COM_assertion, COM_assertion_msg, COM_CHAR, COM_CHARACTER, COM_DOUBLE, COM_DOUBLE_PRECISION, COM_FLOAT, COM_get_sizeof(), COM_INT, COM_INTEGER, COM_REAL, i, Pane_communicator::Pane_comm_buffers::inbuf, Pane_communicator::Pane_comm_buffers::index, j, k, nk, and reduce_minabs().
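Both reduce_maxabs_on_shared_nodes() and reduce_minabs_on_shared_nodes() select one of the values held for a shared node, judged by magnitude. The sign of the selected value is preserved. The sketch below applies that selection to the copies of a single component. The function `reduce_abs` is hypothetical, and keeping the earlier value on a tie is an assumption.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical sketch: return the copy with the largest (take_max == true)
// or smallest (take_max == false) absolute value, keeping its sign.
// On ties the earlier copy wins (an assumption).
double reduce_abs(const std::vector<double>& copies, bool take_max) {
  assert(!copies.empty());
  double best = copies[0];
  for (std::size_t i = 1; i < copies.size(); ++i) {
    const double a = std::fabs(copies[i]);
    const double b = std::fabs(best);
    if (take_max ? a > b : a < b) best = copies[i];
  }
  return best;
}
```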

| void reduce_on_shared_nodes | ( | MPI_Op | op | ) |

Perform a reduction operation using locally cached values of the shared nodes, assuming begin_update_shared_nodes() has been called.

Definition at line 401 of file Pane_communicator.C.

References _comm, _my_pconn_id, _ncomp, _ncomp_bytes, _panes, _ptrs, _reqs_indices, _reqs_recv, _shr_buffs, _strds, _type, COM_assertion, COM_assertion_msg, COM_CHAR, COM_CHARACTER, COM_DOUBLE, COM_DOUBLE_PRECISION, COM_FLOAT, COM_get_sizeof(), COM_INT, COM_INTEGER, COM_REAL, i, Pane_communicator::Pane_comm_buffers::inbuf, Pane_communicator::Pane_comm_buffers::index, j, k, nk, reduce_int(), and reduce_real().

Referenced by reduce_average_on_shared_nodes().
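For a single shared node, reduce_on_shared_nodes() conceptually combines the local copy with every received copy using the given MPI_Op. Every pane that shares the node then holds the same result. The sketch below uses a C++ binary function object in place of the MPI_Op, for example `std::plus` in place of MPI_SUM. The names `reduce_node` and `sum_node` are hypothetical, and the sketch omits the receive and wait logic.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <vector>

// Hypothetical sketch: fold every received copy into the local copy,
// component by component, with a binary operation standing in for an MPI_Op.
template <typename T, typename Op>
void reduce_node(std::vector<T>& local,
                 const std::vector<std::vector<T>>& received, Op op) {
  for (const auto& r : received) {
    assert(r.size() == local.size());
    for (std::size_t k = 0; k < local.size(); ++k)
      local[k] = op(local[k], r[k]);
  }
}

// Convenience wrapper analogous to passing MPI_SUM.
template <typename T>
void sum_node(std::vector<T>& local,
              const std::vector<std::vector<T>>& received) {
  reduce_node(local, received, std::plus<T>());
}
```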

| int total_npanes () const | inline |

Obtain the total number of panes on all processes.

Definition at line 108 of file Pane_communicator.h.

References _total_npanes.

| void update_ghost_values | ( | ) |

Definition at line 693 of file Pane_communicator.C.

References _comm, _gcr_buffs, _gnr_buffs, _my_pconn_id, _ncomp, _ncomp_bytes, _panes, _ptrs, _reqs_indices, _reqs_recv, _strds, _type, COM_assertion, COM_assertion_msg, COM_CHAR, COM_CHARACTER, COM_DOUBLE, COM_DOUBLE_PRECISION, COM_FLOAT, COM_get_sizeof(), COM_INT, COM_INTEGER, COM_REAL, GNR, i, Pane_communicator::Pane_comm_buffers::inbuf, Pane_communicator::Pane_comm_buffers::index, j, k, nk, and update_value().

Referenced by end_update_ghost_cells(), and end_update_ghost_nodes().
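update_ghost_values() is called when a ghost update finishes. Judging from its references (the GNR/GCR buffers, inbuf, index), it copies the received real values into the ghost entries of each local pane. The sketch below makes several assumptions. The hypothetical `ghost_index[j]` gives the item index of the j-th ghost entry, in the same order in which the owning pane packed its values. The buffer `inbuf` holds ncomp values per entry, and the layout is interleaved with the given stride. The function `unpack_ghosts` is hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical sketch: write received values into the ghost entries of one
// pane's interleaved array `data` (stride elements per item).
void unpack_ghosts(std::vector<double>& data, int ncomp, int stride,
                   const std::vector<int>& ghost_index,
                   const std::vector<double>& inbuf) {
  assert(stride >= ncomp);
  assert(inbuf.size() == ghost_index.size() * static_cast<std::size_t>(ncomp));
  for (std::size_t j = 0; j < ghost_index.size(); ++j)
    for (int k = 0; k < ncomp; ++k)
      // .at() throws std::out_of_range on a bad ghost index
      data.at(static_cast<std::size_t>(ghost_index[j]) * stride + k) =
          inbuf[j * ncomp + k];
}
```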

| COM::Window * _appl_window | protected |

Reference to the application window.

Definition at line 196 of file Pane_communicator.h.

Referenced by init(), owner_rank(), and Pane_communicator().

| const MPI_Comm _comm | protected |

MPI Communicator.

Definition at line 198 of file Pane_communicator.h.

Referenced by begin_update(), end_update(), mpi_comm(), reduce_diff_on_shared_nodes(), reduce_maxabs_on_shared_nodes(), reduce_minabs_on_shared_nodes(), reduce_on_shared_nodes(), and update_ghost_values().

| std::vector< std::vector < Pane_comm_buffers > > _gcr_buffs | protected |

Buffer for ghost cells to receive.

Definition at line 227 of file Pane_communicator.h.

Referenced by begin_update(), init(), and update_ghost_values().

| std::vector< std::vector < Pane_comm_buffers > > _gnr_buffs | protected |

Buffer for ghost nodes to receive.

Definition at line 223 of file Pane_communicator.h.

Referenced by begin_update(), init(), and update_ghost_values().

| std::map< int, int > _lpaneid_map | protected |

Mapping from user-defined pane IDs to internal IDs that are unique and contiguous across all processes; the internal IDs are useful for defining unique tags for MPI messages.

Definition at line 206 of file Pane_communicator.h.

Referenced by lpaneid(), and Pane_communicator().

| int _my_pconn_id | protected |

The id of the pconn being used.

Definition at line 193 of file Pane_communicator.h.

Referenced by begin_update(), init(), Pane_communicator(), reduce_average_on_shared_nodes(), reduce_diff_on_shared_nodes(), reduce_maxabs_on_shared_nodes(), reduce_minabs_on_shared_nodes(), reduce_on_shared_nodes(), and update_ghost_values().

| int _ncomp | protected |

Definition at line 209 of file Pane_communicator.h.

Referenced by init(), reduce_average_on_shared_nodes(), reduce_diff_on_shared_nodes(), reduce_maxabs_on_shared_nodes(), reduce_minabs_on_shared_nodes(), reduce_on_shared_nodes(), and update_ghost_values().

| int _ncomp_bytes | protected |

Definition at line 209 of file Pane_communicator.h.

Referenced by begin_update(), init(), reduce_diff_on_shared_nodes(), reduce_maxabs_on_shared_nodes(), reduce_minabs_on_shared_nodes(), reduce_on_shared_nodes(), and update_ghost_values().

| std::vector< COM::Pane * > _panes | protected |

Vector of all local panes.

Definition at line 200 of file Pane_communicator.h.

Referenced by begin_update(), init(), Pane_communicator(), panes(), reduce_average_on_shared_nodes(), reduce_diff_on_shared_nodes(), reduce_maxabs_on_shared_nodes(), reduce_minabs_on_shared_nodes(), reduce_on_shared_nodes(), and update_ghost_values().

| std::vector< void * > _ptrs | protected |

An array of pointers to the data for all local panes.

Definition at line 211 of file Pane_communicator.h.

Referenced by begin_update(), init(), reduce_average_on_shared_nodes(), reduce_diff_on_shared_nodes(), reduce_maxabs_on_shared_nodes(), reduce_minabs_on_shared_nodes(), reduce_on_shared_nodes(), and update_ghost_values().

| std::vector< std::vector < Pane_comm_buffers > > _rcs_buffs | protected |

Buffer for real cells to send.

Definition at line 225 of file Pane_communicator.h.

Referenced by begin_update(), and init().

| std::vector< std::pair< int, int > > _reqs_indices | protected |

The indices in buffs for each pending nonblocking receive request.

Definition at line 231 of file Pane_communicator.h.

Referenced by begin_update(), reduce_diff_on_shared_nodes(), reduce_maxabs_on_shared_nodes(), reduce_minabs_on_shared_nodes(), reduce_on_shared_nodes(), and update_ghost_values().

| std::vector< MPI_Request > _reqs_recv | protected |

Definition at line 229 of file Pane_communicator.h.

Referenced by begin_update(), reduce_diff_on_shared_nodes(), reduce_maxabs_on_shared_nodes(), reduce_minabs_on_shared_nodes(), reduce_on_shared_nodes(), and update_ghost_values().

| std::vector< MPI_Request > _reqs_send | protected |

Arrays of pending nonblocking MPI requests. Same format as _shr_buffs.

Definition at line 229 of file Pane_communicator.h.

Referenced by begin_update(), and end_update().

| std::vector< std::vector < Pane_comm_buffers > > _rns_buffs | protected |

Buffer for real nodes to send.

Definition at line 221 of file Pane_communicator.h.

Referenced by begin_update(), and init().

| std::vector< std::vector < Pane_comm_buffers > > _shr_buffs | protected |

Shared node pane communication buffers.

The outer vector corresponds to local panes; the inner vectors correspond to the

Definition at line 219 of file Pane_communicator.h.

Referenced by begin_update(), init(), reduce_diff_on_shared_nodes(), reduce_maxabs_on_shared_nodes(), reduce_minabs_on_shared_nodes(), and reduce_on_shared_nodes().

| std::vector< int > _sizes | protected |

The sizes of the arrays for all local panes.

Definition at line 213 of file Pane_communicator.h.

Referenced by begin_update(), and init().

| std::vector< int > _strds | protected |

The strides of the arrays for all local panes.

Definition at line 215 of file Pane_communicator.h.

Referenced by begin_update(), init(), reduce_average_on_shared_nodes(), reduce_diff_on_shared_nodes(), reduce_maxabs_on_shared_nodes(), reduce_minabs_on_shared_nodes(), reduce_on_shared_nodes(), and update_ghost_values().

| int _total_npanes | protected |

The total number of panes on all processes.

Definition at line 202 of file Pane_communicator.h.

Referenced by begin_update(), init_pane_comm_buffers(), lpaneid(), Pane_communicator(), and total_npanes().

| int _type | protected |

The base data type, number of components, and the number of bytes of all components for the data to be communicated.

Definition at line 209 of file Pane_communicator.h.

Referenced by begin_update(), init(), reduce_average_on_shared_nodes(), reduce_diff_on_shared_nodes(), reduce_maxabs_on_shared_nodes(), reduce_minabs_on_shared_nodes(), reduce_on_shared_nodes(), and update_ghost_values().